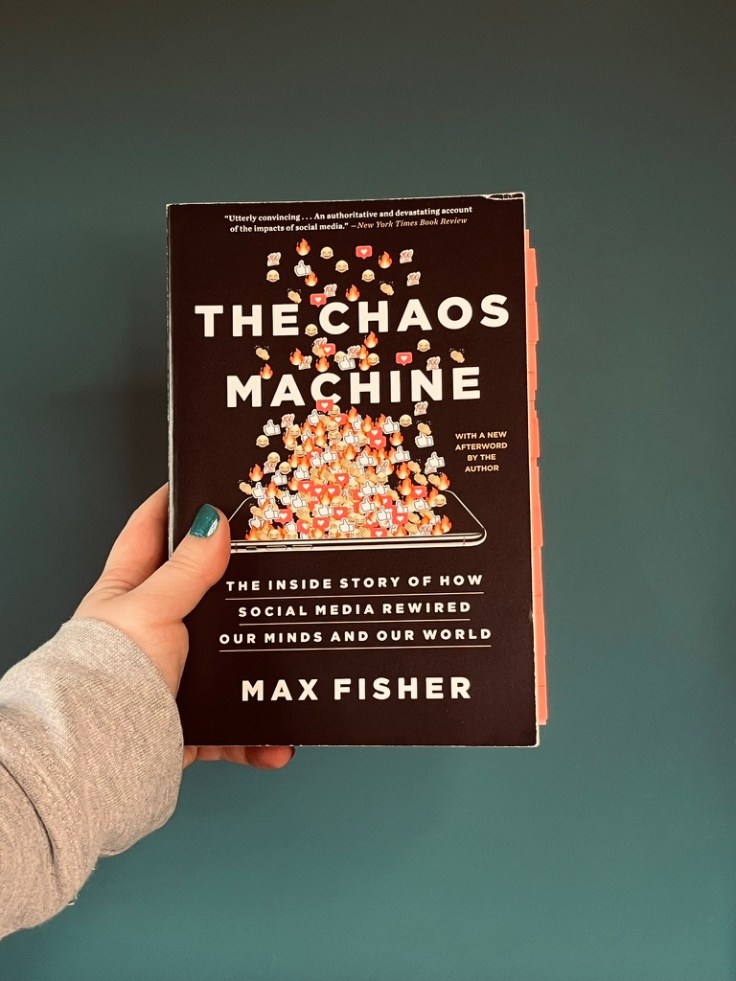

Author: Max Fisher

Rating: ⭐️⭐️⭐️⭐️⭐️

In this book, Max Fisher explores the impacts of social media on our brains, our social connections and our social and political structures. Most of these impacts are clearly negative. While I’m sure many social media companies would argue that their products have positive effects as well, it’s worth all of us asking whether those benefits outweigh the harm to our wellbeing, relationships and social and political structures.

Fisher paints a picture that stands in stark contrast to the one big tech companies have been constructing for years: that they are here to provide us all with free and easy ways to connect with each other and build a better future.

In early chapters, Fisher discusses how Facebook was used to spread misinformation in Myanmar that led to violence against the Rohingya by the military. He also describes similar mobs that formed in Sri Lanka based on rumours spread on Facebook. Civil society groups repeatedly reported these posts, and the Sri Lankan government pleaded with Facebook to counteract the influence of its algorithm. When Facebook did not respond, the government resorted to shutting down the site to stop the violence. It worked, and it also finally got Facebook’s attention, as the company noticed its traffic had dropped.

Both episodes were allegedly aggravated by Facebook’s apparent indifference to the impacts of its product in countries where it barely had a business presence, and by a lack of moderators, particularly ones who spoke the local languages in which hate was being spread.

Fisher also describes how YouTube excels at determining users’ preferences and encloses them in bubbles of content similar to what they have already engaged with. The problem is that these bubbles, while relatively insular, can take entry points like gaming and slowly lead people down rabbit holes of radicalisation. For example, someone might start with videos about video games and, after leaving autoplay running under the direction of YouTube’s algorithm, end up among far-right channels. Or they might go from educational videos by actual doctors to conspiracy videos from anti-vaxxers. This seems to be particularly true for people who already hold right-leaning political beliefs.

The key metric for recommendation algorithms is engagement: likes, shares, views, comments, anything that suggests people are watching and interacting with the content. Whether that attention spurs positive or negative emotions doesn’t matter; the point is that it stimulates emotions. For most of us, negative content is more likely to provoke a strong reaction than positive content, even if it’s untrue, especially since untrue information can often be outrageous in the extreme. Therein lies the problem with algorithms: they can’t determine truth. We know this from all the stories we’ve heard about ChatGPT making up facts. Combine that with our human compulsion to shame and punish people we perceive to have stepped outside the bounds of social and moral norms, and information can spread rapidly across social media networks, with real-life consequences for people, including threats to their physical safety.

Metrics matter for any company or product. How you measure success influences how you shape and develop your products and how willing you are to put safeguards in place against potential harms. Social media companies prioritise engagement because it keeps people on the platform for longer, which means more scrolling and more money from selling ads, their core source of revenue.

For a long time, because these digital platforms were not part of our physical world, I think it didn’t occur to many of us that they could affect our health and wellbeing, let alone something as significant as our democracies. Fisher constructs a comprehensive picture of these impacts and more by talking to researchers, whistle-blowers, victims of misinformation and current and former social media company employees. Many of them have spoken out about what they recognise as the dangers of recommendation algorithms as these companies have developed them, and about internal cultures that allegedly suppress and ignore research showing the harms of their products.

This was an engaging (and sometimes infuriating) read, so I’ll make sure to like, comment and share, to ensure that this book gets the attention it deserves.
